Introduction

The rise of online gambling

The 21st century has witnessed an unprecedented surge in the popularity of online gambling, transforming the way people engage with games of chance and skill. Fueled by the rapid advancement of digital technology and widespread internet accessibility, online gambling platforms have emerged as virtual hubs for casino enthusiasts and bettors worldwide. The allure of convenience, the broad spectrum of games, and the ability to participate from the comfort of one’s own home have propelled this industry to remarkable heights. With virtual casinos, poker rooms, sports betting platforms, and more just a click away, the rise of online gambling has reshaped the traditional gambling landscape, appealing to a diverse range of players seeking entertainment, excitement, and potentially substantial rewards.

Emergence of live casinos

Amidst the evolution of online gambling, a remarkable phenomenon has taken center stage – the emergence of live casinos. These innovative platforms bridge the gap between the virtual and physical casino experience by offering players the chance to engage in real-time games, interact with professional dealers, and relish the atmosphere of a traditional casino, all through the screen of their device.

Powered by high-definition streaming technology, live casinos bring authenticity and social interaction to online gaming, offering players the thrill of participating in games like blackjack, roulette, and baccarat with the added comfort and convenience of playing from their chosen environment. This fusion of cutting-edge technology and classic casino games has captured the imagination of players, setting the stage for a new era of immersive gambling entertainment.

Introducing the 747 Live Casino – A new dimension to online gaming

Step into the future of online gaming with the introduction of the 747 Live Casino, where innovation meets excitement in a truly unparalleled way. This groundbreaking platform redefines the virtual casino experience, elevating it to new heights of realism and engagement. The 747 Live Casino (747live.casino) brings players an immersive world of interactive gameplay, combining state-of-the-art technology with the charm of live dealers, all within the digital realm. With an array of classic and contemporary casino games to choose from, this platform opens the door to an entirely fresh dimension of entertainment, promising players an unforgettable journey into the world of online gaming.

The 747 Live Casino Experience

Cutting-edge technology for real-time gaming

At the heart of the 747 Live Casino experience lies cutting-edge technology that transforms the way players engage in real-time gaming. Powered by advanced streaming and communication systems, this platform seamlessly connects players with live dealers, ensuring uninterrupted and high-quality interactions. The incorporation of high-definition video feeds, real-time chat functionalities, and responsive user interfaces guarantees an immersive and dynamic experience. Whether enjoying a hand of poker or placing bets on the roulette wheel, players are transported into a realm where innovation and interactivity converge, making every moment at the 747 Live Casino a thrilling and technologically advanced adventure.

Professional dealers and immersive atmosphere

Immerse yourself in the captivating atmosphere of the 747 Live Casino, where the magic of a traditional casino comes alive through the expertise of professional dealers. These skilled and charismatic dealers not only bring a touch of authenticity to every game but also foster a sense of camaraderie that enhances the overall gaming experience. Their mastery of the games and engaging interactions create a virtual environment that mirrors the ambiance of a brick-and-mortar casino. From the shuffle of cards to the spin of the roulette wheel, the 747 Live Casino captures the essence of a physical casino, all while allowing players to enjoy it from the comfort of their own space.

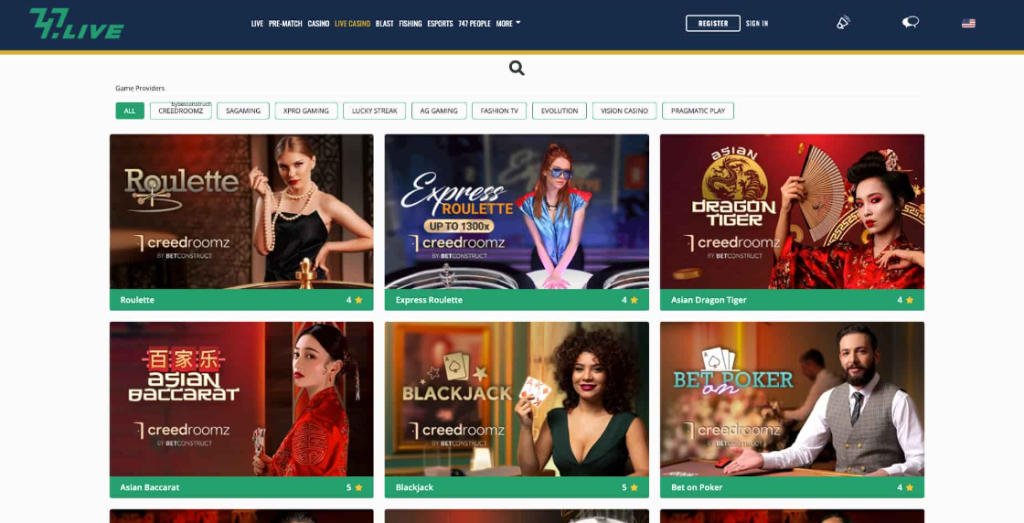

Variety of classic and innovative casino games

Dive into a world of endless entertainment at the 747 Live Casino, where a rich tapestry of both classic and innovative casino games awaits. From timeless favorites like blackjack and roulette to modern twists on traditional games, this platform offers an unparalleled selection designed to cater to every player’s preferences. Experience the thrill of high-stakes poker tournaments, try your luck at the spinning reels of cutting-edge video slots, or challenge the dealer in a game of baccarat. With a diverse array of games that cater to both seasoned players and newcomers, the 747 Live Casino ensures that every visit is a journey of discovery and excitement, promising endless possibilities and unforgettable moments.

Seamless User Interaction

User-friendly interface and navigation

Navigating the 747 Live Casino is a breeze, thanks to its user-friendly interface designed to enhance the player’s experience. Seamlessly blending functionality with aesthetics, the platform’s intuitive layout ensures that players can effortlessly find their favorite games, manage their accounts, and access vital information.

With easy-to-use menus, quick links, and responsive design, players can swiftly transition from one game to another without any hassle. Whether you’re a seasoned gambler or new to the world of online casinos, the 747 Live Casino’s user-friendly interface ensures a smooth gaming journey for everyone.

Real-time chat and interaction with dealers

Experience a new level of engagement at the 747 Live Casino through real-time chat and direct interaction with professional dealers. Gone are the days of static gameplay – this platform empowers players to communicate with dealers in real time, fostering a sense of community and camaraderie. Whether seeking advice, celebrating a win, or simply enjoying a friendly conversation, the chat feature adds a social dimension to the gaming experience. This interactive element not only enhances the authenticity of the casino environment but also creates a connection that bridges the gap between the digital world and the thrill of live gaming.

Personalized settings for individual preferences

The 747 Live Casino is committed to tailoring every player’s experience to their individual preferences, and this commitment is exemplified through its personalized settings. With a range of customizable options, players can fine-tune aspects of their gameplay environment to match their unique style. From adjusting audio and visual settings to setting betting limits and choosing preferred game variations, the platform empowers players to create a gaming space that feels just right for them. Whether you’re seeking a serene backdrop for strategic card games or a lively ambiance for fast-paced slots, the personalized settings at the 747 Live Casino ensure that each player can curate their ideal gaming atmosphere.

Safety and Security

Advanced encryption and data protection

Security is paramount at the 747 Live Casino, as is evident from its implementation of advanced encryption and stringent data protection measures. The platform employs cutting-edge encryption technology to safeguard players’ sensitive information, ensuring that personal and financial data remain confidential and secure. With the ever-present concern of cyber threats, the 747 Live Casino goes the extra mile to provide players with peace of mind, creating a safe environment for enjoying their gaming experience. This commitment to security establishes the foundation for trust between the platform and its players, making it a reliable and responsible choice in the realm of online gaming.

Fair play ensured through live streaming

At the 747 Live Casino, fair play is not just a promise but a reality, ensured through the power of live streaming. Transparency is at the core of the platform’s philosophy, as every move of the game unfolds in real time through high-definition video feeds. Players can witness every shuffle of the deck, every spin of the wheel, and every draw of the cards, leaving little room for doubt about the authenticity of the outcomes. Because players can watch the physical cards and wheels that decide each live round, this approach eases concerns about algorithmic randomness and instills confidence in the integrity of each game. With live streaming, the 747 Live Casino embraces a level of accountability that brings a new dimension to the concept of fair play in online gambling.

Secure payment options for hassle-free transactions

At the 747 Live Casino, convenience and security intersect seamlessly, as the platform offers a range of secure payment options for hassle-free transactions. Recognizing the importance of trusted financial processes, the casino ensures that players can deposit and withdraw funds with ease and confidence. By implementing robust encryption and adhering to industry-standard security protocols, the platform safeguards financial information throughout the payment process. Whether players choose credit cards, e-wallets, or other preferred methods, the 747 Live Casino prioritizes the protection of sensitive financial data, allowing players to focus on their gaming experience without worrying about the safety of their transactions.

The Future of Online Gambling

Impact of 747 Live Casino on the industry

The introduction of the 747 Live Casino marks a significant turning point in the online gambling industry. Its fusion of cutting-edge technology, immersive gameplay, and authentic interaction has set a new benchmark for online casinos worldwide.

As players increasingly seek heightened realism and engagement in their gaming experiences, the 747 Live Casino’s innovative approach is poised to redefine industry standards. This platform not only caters to the desires of traditional casino enthusiasts but also attracts a broader audience, drawn by the allure of a dynamic and interactive gaming environment. Its impact extends beyond just a new way of playing; it represents a glimpse into the future of online gambling, where advanced technology meets the thrill of casino entertainment in ways that were once unimaginable.

Evolution of live casinos for an authentic experience

Live casinos have undergone a remarkable transformation, culminating in the authentic experience offered by platforms like the 747 Live Casino. From their early beginnings as simple webcam broadcasts of casino games, live casinos have evolved into sophisticated ecosystems that replicate the ambiance and interactivity of brick-and-mortar casinos. The incorporation of advanced streaming technology, high-definition visuals, and professional dealers has revolutionized the way players engage with their favorite games. The 747 Live Casino stands as a testament to this evolution, showcasing how innovation has bridged the gap between virtual and real-world gambling. As these platforms continue to advance, players can anticipate even greater levels of immersion, authenticity, and engagement, redefining the boundaries of what’s possible in the world of online gaming.

Anticipating further advancements in technology

The rapid evolution of online gaming and live casinos, exemplified by the 747 Live Casino, offers a mere glimpse into the boundless potential of technological advancements in this field. As technology continues to progress at an astonishing rate, players can look forward to an exciting future characterized by even more immersive experiences. Anticipated advancements include enhanced virtual reality integration, allowing players to step into a fully interactive 3D casino environment from the comfort of their homes. Moreover, artificial intelligence-driven enhancements might lead to more personalized gameplay, where games adapt to individual preferences and skill levels. The 747 Live Casino is just the beginning, and the horizon holds a captivating array of possibilities that promise to elevate the online gaming experience to unprecedented heights, delighting players with innovations that redefine entertainment and engagement.

Conclusion

Elevating online gambling to new heights

The emergence of platforms like the 747 Live Casino represents a pivotal moment in the world of online gambling, propelling the industry to new heights of sophistication and engagement. Gone are the days of static virtual gaming; the 747 Live Casino’s blend of cutting-edge technology and authentic interactions has transcended traditional boundaries, offering players an experience that rivals the excitement of physical casinos. By fusing real-time gameplay, professional dealers, and personalized settings, this platform has successfully captured the essence of casino entertainment while adding a layer of convenience that online gaming enthusiasts seek. As the 747 Live Casino paves the way for an era of dynamic and interactive gambling, it signals a transformative shift that will inevitably inspire other online casinos to elevate their offerings, enriching the entire landscape of digital gaming.

Embracing the convenience and excitement of 747 Live Casino

Embracing the 747 Live Casino means embracing a new paradigm of convenience and excitement in the realm of online gaming. This innovative platform brings the thrill of live casinos directly to players’ screens, allowing them to revel in the fervor of casino classics from the comfort of their chosen space. With the ability to interact with professional dealers in real time, personalized settings that cater to individual preferences, and an extensive array of games, the 747 Live Casino redefines how players experience the captivating world of gambling. By combining cutting-edge technology with the allure of traditional casino ambiance, this platform creates a gaming haven that’s as alluring as it is convenient.

Join the revolution in live online gaming

Step into the forefront of a gaming revolution by joining the ranks of live online gaming enthusiasts. With the advent of groundbreaking platforms like the 747 Live Casino, the traditional boundaries of online entertainment are being reshaped, offering players an unprecedented level of engagement and authenticity. By immersing yourself in real-time interactions, professional dealers, and an expansive selection of games, you can experience the thrill of the casino floor without leaving your own space. Don’t miss out on the chance to be a part of this transformative movement in gaming – embrace the revolution and elevate your online gaming experience to thrilling new heights.

What is the 747 Live Casino?

The 747 Live Casino is an innovative online gaming platform that merges cutting-edge technology with the excitement of live casino games. It offers players an authentic and immersive casino experience by providing real-time gameplay, professional dealers, and a wide range of classic and innovative casino games.

How does the 747 Live Casino work?

The 747 Live Casino uses advanced streaming technology to broadcast live dealers and gameplay to players’ devices in real time. Players can interact with dealers through a chat feature, place bets, and engage in various casino games just as they would in a physical casino.

What games are available at the 747 Live Casino?

The 747 Live Casino offers a diverse selection of casino games, including classic options like blackjack, roulette, and baccarat, as well as modern twists on traditional games. Players can also expect to find poker, slots, and other exciting variations to cater to different preferences.

Can I interact with dealers in real time?

Yes, the 747 Live Casino allows players to interact with professional dealers in real time through a chat feature. This feature adds a social dimension to the gaming experience, creating a more engaging and authentic atmosphere.

How secure are the transactions and my personal information?

The 747 Live Casino takes security seriously. It employs advanced encryption technology to protect players’ sensitive data and offers a range of secure payment options for deposits and withdrawals, ensuring that financial transactions are safe and reliable.